13 Dec 2021

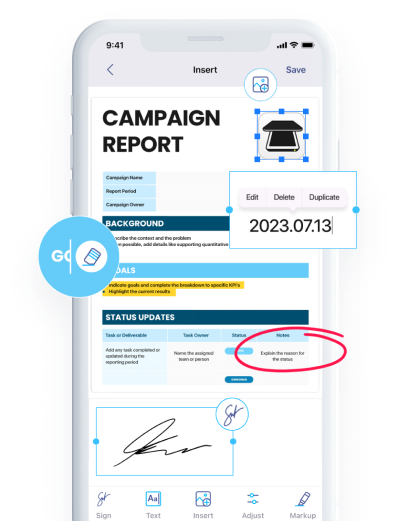

iScanner is the #1 scanner app in the App Store, with 70M users worldwide. Its code includes artificial intelligence that detects document borders and produces accurate scans even in difficult conditions. Thanks to it, users can scan a document automatically in 2 seconds instead of spending 5–6 seconds or more selecting the document manually.

“AI helps us achieve 97% scan accuracy.”

Alexandra Duzhinskaya, ML engineer of the iScanner app, shares how AI makes iScanner more convenient for users.

Why did you decide to build a neural network into the iScanner app?

AD: Users expect iScanner to offer a full range of tools for editing and signing documents, but they also need an app that quickly turns a photo of a document into a clean, accurate copy.

Users rarely think about the conditions under which they take photos, yet those conditions affect the final scan: perspective distortion, lighting, and the color and texture of the background. A neural network helps us compensate for all of these.

The biggest challenge is locating what the user actually wants to scan. Everything starts with segmenting the body and the borders of the document in the image. Most scanner apps either can’t detect the borders precisely and automatically or make many errors. For instance, it is far from trivial for the engine to tell where the table ends and the document begins. It gets even harder when the sheet of paper lies on a white table or, as often happens, on a stack of other papers. With our neural network, the accuracy of document border detection has grown from 62% to 97%.
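To make segmenting the body and the borders more concrete: once every pixel has been labeled as document (1) or background (0), one simple way to read off the border is to take the document pixels that touch background or the image edge. The sketch below is a plain-Python illustration under that assumption; the `border_pixels` helper and its 4-neighbor rule are hypothetical and not taken from iScanner.

```python
from typing import List, Set, Tuple

# Hypothetical binary mask: 1 = document pixel, 0 = background pixel.
Mask = List[List[int]]


def border_pixels(mask: Mask) -> Set[Tuple[int, int]]:
    """Return (row, col) of document pixels that touch background or the image edge."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    border: Set[Tuple[int, int]] = set()
    for r in range(height):
        for c in range(width):
            if not mask[r][c]:
                continue  # background pixels are never part of the document border
            # Check the four direct neighbors (up, down, left, right).
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < height and 0 <= nc < width)
                if outside or not mask[nr][nc]:
                    border.add((r, c))
                    break
    return border
```

For a 3×3 block of document pixels in the middle of a 5×5 image, every block pixel except the center one is reported as border. A document lying on a white table is hard precisely because the mask itself is hard to get right; this step only extracts the outline once the mask exists.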

Standard classic computer vision tools just can’t handle all the corner cases, which is why we trained our own neural network. The main issue we faced was finding enough data to train it. Implementation and productization took almost 6 months, from dataset annotation to optimizing model performance on device, before we reached the result we wanted.

Which tricky cases does the neural network help tackle?

AD: We can handle damaged documents, images taken in poor lighting, perspective distortion, multiple documents in one scene, other objects overlapping the main document, and so on. The cherry on top is that a single image often combines several or even all of these issues.

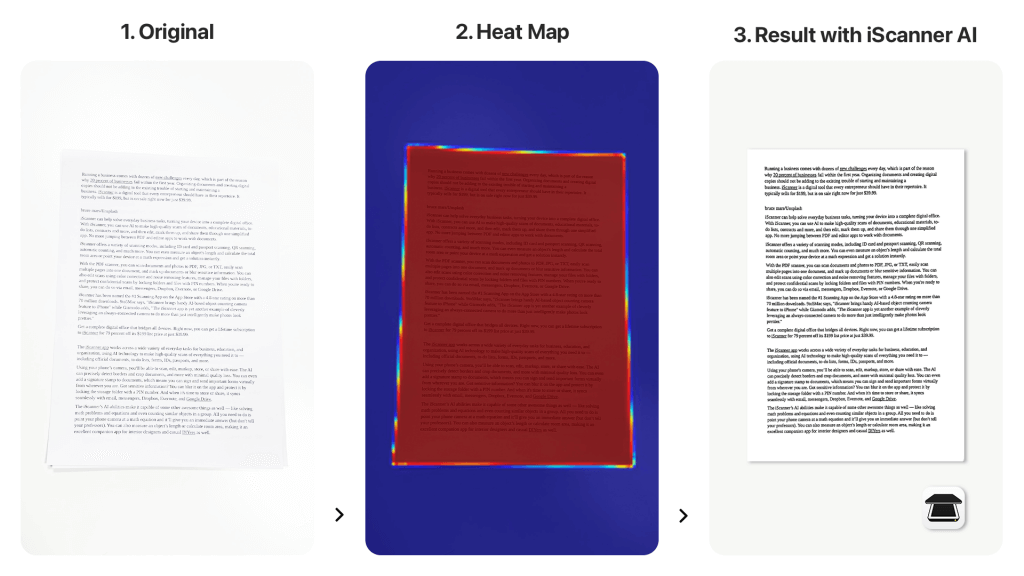

The iScanner AI recognition flow

How do you measure the improvement the neural network brings?

AD: Our goal is to teach the AI to find the body of the document and distinguish it from the background, so that we can correct the perspective afterwards. Basically, for each pixel in the image, we need to predict whether it belongs to the document. We trained a neural network to do exactly that. Using the heat map tool, we can visually check whether everything works as expected.
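The per-pixel prediction described above can be illustrated with a minimal sketch, assuming the network outputs a probability in [0, 1] for every pixel. Thresholding that map gives a document/background mask, and shading the raw probabilities gives a crude text heat map. The 0.5 threshold, the function names, and the text rendering are illustrative assumptions, not iScanner’s actual tooling.

```python
from typing import List

# Hypothetical input: one probability in [0, 1] per pixel, as a
# segmentation network might output for "this pixel is the document".
ProbMap = List[List[float]]
Mask = List[List[int]]

# Shades ordered from "cold" (likely background) to "hot" (likely document).
SHADES = " .:-=+*#%@"


def to_mask(prob_map: ProbMap, threshold: float = 0.5) -> Mask:
    """Label each pixel 1 (document) or 0 (background)."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]


def render_heat_map(prob_map: ProbMap) -> str:
    """Render probabilities as text, one shade character per pixel."""
    last = len(SHADES) - 1
    rows = []
    for row in prob_map:
        # Clamp so slightly out-of-range values still map to a valid shade.
        rows.append("".join(SHADES[min(last, max(0, round(p * last)))] for p in row))
    return "\n".join(rows)


if __name__ == "__main__":
    probs = [
        [0.05, 0.10, 0.08, 0.02],
        [0.12, 0.91, 0.95, 0.07],
        [0.09, 0.88, 0.97, 0.11],
    ]
    print(to_mask(probs))  # [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0]]
    print(render_heat_map(probs))
```

A real heat map would be drawn as a color overlay on the photo; the text version only shows the idea that confident document pixels stand out from the background.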

Moreover, since we can tell whether each pixel’s prediction is right or wrong, we can do the math and compute a set of numerical metrics that measure the results objectively.
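Which metrics iScanner actually tracks isn’t stated here. As an assumption, the sketch below uses two common segmentation metrics, pixel accuracy and intersection over union (IoU), plus precision and recall. It compares a predicted mask with a hand-annotated one pixel by pixel; `pixel_metrics` is a hypothetical helper.

```python
from typing import Dict, List

# Hypothetical binary masks: 1 = document pixel, 0 = background pixel.
Mask = List[List[int]]


def pixel_metrics(predicted: Mask, truth: Mask) -> Dict[str, float]:
    """Compare a predicted document mask against an annotated one."""
    if len(predicted) != len(truth) or any(
        len(a) != len(b) for a, b in zip(predicted, truth)
    ):
        raise ValueError("masks must have the same shape")
    tp = fp = fn = tn = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1  # document pixel correctly found
            elif p:
                fp += 1  # background mistaken for document
            elif t:
                fn += 1  # document pixel missed
            else:
                tn += 1  # background correctly rejected
    total = tp + fp + fn + tn
    union = tp + fp + fn
    # Convention for empty denominators: treat "nothing to get wrong" as perfect.
    return {
        "pixel_accuracy": (tp + tn) / total if total else 1.0,
        "iou": tp / union if union else 1.0,
        "precision": tp / (tp + fp) if tp + fp else 1.0,
        "recall": tp / (tp + fn) if tp + fn else 1.0,
    }
```

IoU is often preferred over plain pixel accuracy for this kind of task because a small document in a large photo can score high accuracy even when most of the document is missed.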

“At the moment, over 97.3% of the documents in our dataset are detected with an error too small for the human eye to notice.”
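The interview doesn’t define what counts as an error too small to notice. Purely as an illustration, the sketch below measures each document by its largest corner displacement, expressed as a fraction of the image diagonal. It then reports the share of documents within a tolerance. The corner-based metric, the matching corner order, and the 1% tolerance are all hypothetical assumptions.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def corner_error(
    pred: Sequence[Point], truth: Sequence[Point], width: int, height: int
) -> float:
    """Largest corner displacement, as a fraction of the image diagonal.

    Assumes both lists hold four corners in the same order.
    """
    if len(pred) != 4 or len(truth) != 4:
        raise ValueError("expected four corners per document")
    diagonal = math.hypot(width, height)
    return max(math.dist(p, t) for p, t in zip(pred, truth)) / diagonal


def share_detected(errors: Sequence[float], tolerance: float = 0.01) -> float:
    """Fraction of documents whose corner error is within the (hypothetical) tolerance."""
    if not errors:
        raise ValueError("need at least one document")
    return sum(1 for e in errors if e <= tolerance) / len(errors)
```

Normalizing by the diagonal keeps the tolerance independent of image resolution, so the same threshold applies to a low-resolution preview and a full-size photo. `math.dist` requires Python 3.8 or later.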

The second proof of our success is the user experience itself. In the iScanner app, users can choose between automatic and manual document detection. Since we integrated our neural network, the share of manual cropping has decreased by 58%.